What is Cloud Performance Testing?

Cloud performance testing helps teams validate how an application behaves under real-world load conditions before it reaches end users. Unlike traditional setups, cloud-based environments offer on-demand scalability, cost efficiency, and geographic flexibility that legacy infrastructure cannot match.

Did you know that 94% of enterprises are already leveraging cloud services? As cloud adoption continues to soar, ensuring the performance of these environments becomes increasingly critical.

This blog will explore why cloud performance testing is essential for your business and how it can help you achieve scalability and reliability in your cloud infrastructure.

Why is Cloud Performance Testing Important?

Customer Satisfaction:

- A swift, seamless user experience is paramount in today’s digital landscape.

- Performance issues like slow load times or unresponsive interfaces can drive customers away.

- Cloud performance testing ensures you identify and fix potential bottlenecks before they affect users, enhancing satisfaction and retention.

Cost Efficiency:

- Efficient resource utilization is crucial for cost savings in cloud infrastructure.

- Performance optimization helps identify underutilized resources and unnecessary costs.

- By fine-tuning performance, businesses can reduce operational expenses and maximize ROI on their cloud investments.

Business Continuity:

- Downtime can be detrimental to revenue and reputation.

- Cloud performance testing proactively identifies potential points of failure.

- Implementing reliability measures such as failover mechanisms and disaster recovery plans ensures uninterrupted operations, even in adverse conditions.

What Are the Benefits of Cloud Performance Testing?

Cloud performance testing assesses how applications function in a cloud environment. Applications must handle fluctuating traffic, sustain performance under stress, and recover from failures quickly. Teams that skip this step often pay the price through unplanned downtime and a poor user experience.

Here’s why businesses are making the shift:

- Scalable test environments: Teams can instantly spin up thousands of virtual users without investing in physical hardware. This makes it easy to simulate sudden traffic spikes with precision.

- Faster time-to-test: Tests can be configured and executed in hours, not days. This accelerates release cycles and keeps CI/CD pipelines moving.

- Cost-effective execution: You pay only for the compute resources you use. There’s no overhead from maintaining dedicated test infrastructure.

- Geo-distributed testing: Teams can simulate user traffic from multiple regions. This helps detect latency and performance issues across global audiences.

- Seamless CI/CD integration: Performance tests embed directly into your DevOps pipeline. This enables continuous, automated validation at every release stage.

- Accurate production parity: Cloud environments closely mirror production setups. This leads to more reliable and actionable test results.

- Faster root cause analysis: Built-in monitoring tools surface bottlenecks like slow APIs or database queries in real time.

Of course, performance testing delivers its full value only when the underlying application is built for the cloud. Teams that invest in cloud-native architecture from the start consistently see faster test cycles, fewer bottlenecks, and more actionable results. Mindfire’s Cloud Application Development Services combine cloud-native architecture with performance-first development practices refined across 25+ years of enterprise software delivery. The result is applications that are easier to test, faster to scale, and more resilient under real-world traffic demands.

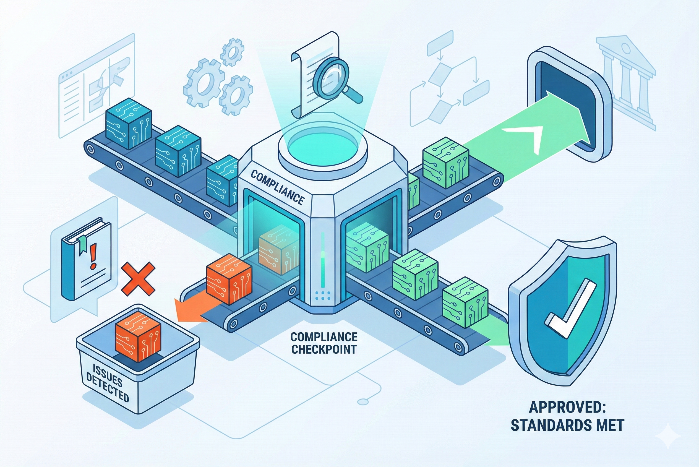

What are the Types of Cloud Performance Testing?

Load Testing: Simulating User Traffic

- In Cloud Environments: Utilize cloud-based load testing tools to simulate realistic user traffic scenarios.

- Purpose: Measure how your system performs under expected peak loads, ensuring it can handle typical usage without degradation.
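To make the idea concrete, here is a minimal sketch of what a load test measures. It assumes a hypothetical `handle_request` function standing in for the system under test; a real tool such as JMeter or LoadRunner would issue actual network requests instead. The helper names `timed_call`, `percentile`, and `run_load_test` are illustrative, not part of any library.

```python
import math
import time
from concurrent.futures import ThreadPoolExecutor


def handle_request(user_id: int) -> None:
    """Hypothetical stand-in for one call to the system under test."""
    time.sleep(0.001)  # pretend the service takes about 1 ms


def timed_call(user_id: int) -> float:
    """Return the latency of a single request in seconds."""
    start = time.perf_counter()
    handle_request(user_id)
    return time.perf_counter() - start


def percentile(sorted_values: list[float], pct: float) -> float:
    """Nearest-rank percentile of an already sorted list."""
    rank = max(1, math.ceil(pct / 100 * len(sorted_values)))
    return sorted_values[rank - 1]


def run_load_test(virtual_users: int, requests_per_user: int) -> dict[str, float]:
    """Send requests from a pool of concurrent workers and summarize latency."""
    total = virtual_users * requests_per_user
    started = time.perf_counter()
    # One worker thread per virtual user; the requests are shared among them.
    with ThreadPoolExecutor(max_workers=virtual_users) as pool:
        latencies = sorted(pool.map(timed_call, range(total)))
    elapsed = time.perf_counter() - started
    return {
        "requests": total,
        "throughput_rps": total / elapsed,
        "p50_s": percentile(latencies, 50),
        "p95_s": percentile(latencies, 95),
    }
```

Calling `run_load_test(virtual_users=50, requests_per_user=20)` returns the request count, throughput, and median and 95th-percentile latency, which are the numbers you would compare against your expected peak load targets.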

Stress Testing: Pushing the System to its Limits

- In Cloud Environments: Increase the load beyond normal capacity to determine the breaking point.

- Purpose: Identify performance bottlenecks and weaknesses in your system, ensuring it can withstand unexpected spikes in traffic or resource demands.
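A stress test typically ramps load in steps until a limit is breached. The sketch below shows that ramp logic only. The latency model `simulated_p95_latency_ms`, its capacity of 500 users, and all thresholds are assumptions made up for illustration; in a real run the `measure` callable would trigger an actual test at that load level.

```python
from typing import Callable, Optional


def simulated_p95_latency_ms(concurrent_users: int, capacity: int = 500) -> float:
    """Hypothetical model: latency is flat up to capacity, then climbs steeply."""
    base_ms = 120.0
    if concurrent_users <= capacity:
        return base_ms
    return base_ms + 2.0 * (concurrent_users - capacity)


def find_breaking_point(
    start_users: int,
    step_users: int,
    max_users: int,
    latency_limit_ms: float,
    measure: Callable[[int], float] = simulated_p95_latency_ms,
) -> Optional[int]:
    """Ramp load in fixed steps; return the first level that breaches the limit."""
    users = start_users
    while users <= max_users:
        if measure(users) > latency_limit_ms:
            return users
        users += step_users
    return None  # the limit was never breached within the tested range
```

With the assumed model, `find_breaking_point(100, 100, 1000, 300.0)` reports 600 users as the first level where latency exceeds the 300 ms limit.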

Spike Testing: Testing Sudden Increases in Load

- In Cloud Environments: Introduce rapid and significant load increases to assess system response.

- Purpose: Evaluate how your system scales dynamically in response to sudden surges in traffic, ensuring it can handle unexpected peaks without degradation.
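One way to reason about a spike test is to model the load profile and ask how long the system stays overloaded while autoscaling catches up. The following is a rough sketch under a deliberately simplified, assumed autoscaler model (no scale-down, one scale-up request at a time); the function names and all numbers are hypothetical.

```python
import math


def spike_profile(baseline: int, peak: int, warmup_s: int, spike_s: int, recovery_s: int) -> list[int]:
    """Target users per second: steady baseline, sudden peak, back to baseline."""
    return [baseline] * warmup_s + [peak] * spike_s + [baseline] * recovery_s


def seconds_overloaded(
    profile: list[int],
    users_per_instance: int,
    start_instances: int,
    scale_up_delay_s: int,
) -> int:
    """Count seconds where demand exceeds capacity.

    Assumed model: when overload is first seen, enough instances to cover the
    current demand are requested and become ready `scale_up_delay_s` later.
    """
    instances = start_instances
    pending_count = 0
    pending_ready_at = None
    overloaded = 0
    for second, demand in enumerate(profile):
        # Bring requested instances online once their delay has passed.
        if pending_ready_at is not None and second >= pending_ready_at:
            instances += pending_count
            pending_count, pending_ready_at = 0, None
        if demand > instances * users_per_instance:
            overloaded += 1
            if pending_ready_at is None:
                pending_count = math.ceil(demand / users_per_instance) - instances
                pending_ready_at = second + scale_up_delay_s
    return overloaded
```

In this model, a jump from 100 to 1,000 users with a 3-second scale-up delay leaves the system overloaded for 3 seconds, which is the window a spike test is meant to expose.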

Soak Testing: Evaluating Performance Over Extended Periods

- In Cloud Environments: Run prolonged tests to assess performance stability over time.

- Purpose: Detect memory leaks, performance degradation, or other issues that may arise during sustained periods of activity, ensuring long-term reliability.
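A common soak-test check is whether memory keeps growing over time. As a minimal sketch, the code below fits a least-squares line to periodic memory samples and flags a possible leak when the growth rate exceeds a threshold. The 5 MB per hour default and the function names are assumptions for illustration; a steady upward slope is a hint worth investigating, not proof of a leak.

```python
def growth_per_hour(samples_mb: list[float], interval_minutes: float) -> float:
    """Least-squares slope of memory usage, in MB per hour."""
    n = len(samples_mb)
    if n < 2:
        raise ValueError("need at least two samples to estimate a trend")
    hours = [i * interval_minutes / 60 for i in range(n)]
    mean_x = sum(hours) / n
    mean_y = sum(samples_mb) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(hours, samples_mb))
    variance = sum((x - mean_x) ** 2 for x in hours)
    return covariance / variance


def looks_like_leak(
    samples_mb: list[float],
    interval_minutes: float,
    max_growth_mb_per_hour: float = 5.0,  # assumed threshold, tune per service
) -> bool:
    """Flag sustained memory growth above the allowed rate."""
    return growth_per_hour(samples_mb, interval_minutes) > max_growth_mb_per_hour
```

For example, samples of 500, 510, 520, and 530 MB taken every 30 minutes give a slope of 20 MB per hour, which this check would flag.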

Reliability Testing: Ensuring Systems Can Recover from Failures

- In Cloud Environments: Introduce controlled failures to test system recovery mechanisms.

- Purpose: Validate the effectiveness of redundancy, failover, and disaster recovery strategies, ensuring your system can maintain uptime and data integrity in the event of failures or disruptions.
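The pattern behind a reliability test can be sketched in a few lines: inject a controlled failure, then confirm that requests are still served. The `Replica` class and `call_with_failover` function below are hypothetical stand-ins; in a real environment the failure would be injected into actual infrastructure rather than by flipping a flag.

```python
class Replica:
    """Hypothetical service replica whose health can be toggled for a test."""

    def __init__(self, name: str, healthy: bool = True) -> None:
        self.name = name
        self.healthy = healthy

    def handle(self, request: str) -> str:
        if not self.healthy:
            raise ConnectionError(f"{self.name} is down")
        return f"{self.name} served {request}"


def call_with_failover(replicas: list[Replica], request: str) -> str:
    """Try each replica in order and return the first successful response."""
    errors = []
    for replica in replicas:
        try:
            return replica.handle(request)
        except ConnectionError as exc:
            errors.append(str(exc))
    raise RuntimeError("all replicas failed: " + "; ".join(errors))


# Controlled failure: take the primary down and check the standby takes over.
primary, standby = Replica("primary"), Replica("standby")
primary.healthy = False
response = call_with_failover([primary, standby], "GET /health")
```

Here `response` comes from the standby, which is the behavior the test is meant to confirm. If every replica is down, the function raises an error instead of failing silently.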

What are the Best Tools for Cloud Performance Testing?

Monitoring Tools: Prometheus, Grafana

- In Cloud Environments: Prometheus and Grafana run on and integrate with major cloud platforms such as AWS, Azure, and Google Cloud.

- Purpose: These tools allow for comprehensive monitoring of cloud infrastructure, providing real-time insights into performance metrics such as CPU usage, memory consumption, network traffic, and more. Grafana’s visualization capabilities enhance data analysis, enabling proactive identification of performance issues and optimization opportunities.

Load Testing Tools: Apache JMeter, LoadRunner

- In Cloud Environments: Both Apache JMeter and LoadRunner can run on cloud infrastructure, with load generators scaled out across multiple machines as test volume grows.

- Purpose: These tools facilitate load testing by simulating user traffic and generating load on cloud-based applications. By accurately replicating real-world scenarios, they help assess how cloud systems perform under different levels of demand, ensuring scalability and reliability.

Chaos Engineering Tools: Chaos Monkey, Gremlin

- In Cloud Environments: Chaos Monkey and Gremlin are designed to work within cloud-native architectures, leveraging cloud services for controlled chaos experiments.

- Purpose: These tools enable chaos engineering practices in the cloud, allowing organizations to intentionally inject faults and disruptions into their systems to test resilience and fault tolerance. By simulating failures in a controlled environment, they help identify weaknesses and strengthen cloud infrastructure’s reliability and availability.

Best Practices for Cloud Performance Testing

1. Define Clear Objectives

- What to Do: Establish specific performance goals and key performance indicators (KPIs).

- How to Do It: Identify metrics such as response time, throughput, and resource utilization that need to be measured.
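Objectives are easiest to enforce when they are written down as data. The sketch below is one possible shape, not a standard format: the `Objective` class, the metric names, and every threshold are hypothetical examples.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Objective:
    """One performance goal: a metric name and the limit it must respect."""

    metric: str
    limit: float
    higher_is_better: bool = False  # True for metrics such as throughput


def evaluate(objectives: list[Objective], measured: dict[str, float]) -> list[str]:
    """Return a description of every objective the measurements fail to meet."""
    failures = []
    for objective in objectives:
        value = measured[objective.metric]
        if objective.higher_is_better:
            met = value >= objective.limit
        else:
            met = value <= objective.limit
        if not met:
            failures.append(f"{objective.metric}: measured {value}, limit {objective.limit}")
    return failures


# Hypothetical targets for response time, throughput, and resource utilization.
objectives = [
    Objective("p95_response_ms", 500.0),
    Objective("throughput_rps", 200.0, higher_is_better=True),
    Objective("cpu_utilization_pct", 75.0),
]
measured = {"p95_response_ms": 430.0, "throughput_rps": 180.0, "cpu_utilization_pct": 62.0}
failures = evaluate(objectives, measured)
```

With these sample numbers, only the throughput objective fails, so `failures` contains a single entry naming `throughput_rps`.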

2. Use Realistic Scenarios

- What to Do: Ensure testing environments closely mimic production conditions.

- How to Do It: Replicate production configurations, data sets, and user behaviors in your testing setup.

3. Automate Testing

- What to Do: Integrate performance testing into your development workflow.

- How to Do It: Use CI/CD pipelines to run automated performance tests with every code change.
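In a pipeline, an automated performance test usually ends with a pass or fail decision. A minimal sketch of such a gate, assuming you already have a stored baseline and a fresh p95 latency measurement (the function name and the 10% default tolerance are illustrative choices):

```python
def regression_exit_code(
    baseline_p95_ms: float,
    current_p95_ms: float,
    tolerance_pct: float = 10.0,  # assumed allowance for run-to-run noise
) -> int:
    """Return 0 if current p95 latency is within tolerance of the baseline, else 1."""
    if baseline_p95_ms <= 0:
        raise ValueError("baseline must be positive")
    allowed_ms = baseline_p95_ms * (1 + tolerance_pct / 100)
    return 0 if current_p95_ms <= allowed_ms else 1
```

A pipeline step could pass the result to `sys.exit`, so that a build whose latency regresses beyond the tolerance is marked as failed.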

4. Monitor Continuously

- What to Do: Keep track of system performance in real time.

- How to Do It: Utilize monitoring tools like Prometheus and Grafana to set up dashboards and alerts for ongoing performance tracking.

5. Plan for Failures

- What to Do: Ensure your system can recover quickly from disruptions.

- How to Do It: Develop disaster recovery plans and use chaos engineering tools like Chaos Monkey to test your system’s resilience.

Conclusion

Ultimately, cloud performance testing is about confidence. Confidence that your application can handle real traffic, real users, and real-world conditions. Building that confidence requires the right tools, the right environment, and a consistent testing discipline at every stage of development.

Organizations that build performance testing into their development process early are better positioned to scale confidently. This applies not just to application layers but also to the database layer. Explore how database performance testing works to build a more complete picture of end-to-end performance validation.

Partner With Expert Software Testers Who Deliver Results

At Mindfire, we are experts in ensuring your cloud environment is scalable and reliable through comprehensive performance testing services. Our proven methodologies and use of industry-leading tools ensure your systems perform optimally, even under the most demanding conditions. We invite businesses to partner with us to enhance their cloud performance, reduce costs, and ensure uninterrupted operations. Contact us today to learn how we can help you achieve superior cloud performance.